Publications

Publications in reverse chronological order.

2025


Do Large Language Models Reason Causally Like Us? Even Better?
H. M. Dettki, Brenden M. Lake, Charley M. Wu, and Bob Rehder
In Proceedings of the Annual Meeting of the Cognitive Science Society (CogSci), 2025

Causal reasoning is a core component of intelligence. Large language models (LLMs) have shown impressive capabilities in generating human-like text, raising questions about whether their responses reflect true understanding or statistical patterns. We compared causal reasoning in humans and four LLMs using tasks based on collider graphs, in which agents rate the likelihood of a query variable occurring given evidence from other variables. We find that LLMs reason causally along a spectrum from human-like to normative inference, with alignment shifting based on model, context, and task. Overall, GPT-4o and Claude showed the most normative behavior, including "explaining away", whereas Gemini-Pro and GPT-3.5 did not. Although all agents deviated from the expected independence of causes (Claude the least), they exhibited strong associative reasoning and predictive inference when assessing the likelihood of the effect given its causes. These findings underscore the need to assess AI biases as these systems increasingly assist human decision-making.

@inproceedings{dettki2025largelanguagemodelsreason, title = {Do Large Language Models Reason Causally Like Us? Even Better?}, author = {Dettki, H. M. and Lake, Brenden M. and Wu, Charley M. and Rehder, Bob}, booktitle = {Proceedings of the Annual Meeting of the Cognitive Science Society (CogSci)}, year = {2025}, }

Reasoning Strategies and Robustness in Language Models: Contrasting Reasoning in Humans and LLMs On a Causal Benchmark
H. M. Dettki
Master’s Thesis in Machine Learning, University of Tuebingen, 2025

Large Language Models (LLMs) have made significant strides in natural language processing and exhibit impressive capabilities in natural language understanding and generation. However, it remains underexplored to what degree language models can perform causal reasoning, often considered a hallmark of intelligence, how they reason, and whether their responses align with human reasoning. To this end, we evaluate over 20 LLMs on 11 causal reasoning tasks formalized by a collider graph and compare their performance to that of humans on the same tasks. We also evaluate to what extent causal reasoning depends on knowledge about common causal relationships in the natural world, on the amount of irrelevant information in the prompt, and on the reasoning budget. We find that most LLMs are aligned with human reasoning up to ceiling effects, with chain-of-thought prompting increasing alignment for LLMs that were misaligned under single-shot prompting. We further find that most LLMs reason in a more deterministic, rule-like way than humans. Chain-of-thought prompting increases reasoning consistency and, under noisy prompts, also pushes some LLMs’ reasoning from a probabilistic to a more deterministic regime. Reasoning is robust to replacing real-world content with abstract placeholders but degrades when prompts are injected with irrelevant text; in these cases, chain-of-thought recovers much of the lost performance. Across experiments, many models exceed human baselines on qualitative reasoning signatures such as explaining away and Markov compliance, which humans typically exhibit only weakly or violate.
Together, we find a divergence in reasoning style: humans rely more on probabilistic judgments, whereas many LLMs default to near-deterministic rules. Such determinism can enhance reliability and augment human reasoning by providing stable, rule-like outputs. Yet it also risks failure in real-world settings where uncertainty is intrinsic, underscoring the need to better characterize LLM reasoning strategies to guide their safe and effective application.

@mastersthesis{dettki2025thesis, title = {Reasoning Strategies and Robustness in Language Models: Contrasting Reasoning in Humans and LLMs On a Causal Benchmark}, author = {Dettki, H. M.}, school = {University of Tuebingen}, type = {Master's Thesis in Machine Learning}, year = {2025}, }

Causal Strengths and Leaky Beliefs: Interpreting LLM Reasoning via Noisy-OR Causal Bayes Nets
Hanna Dettki, Charley Wu, Brenden Lake, and Bob Rehder
WiML Workshop at NeurIPS, 2025

@misc{dettki2025causalstrengthsleakybeliefs, title = {Causal Strengths and Leaky Beliefs: Interpreting LLM Reasoning via Noisy-OR Causal Bayes Nets}, author = {Dettki, Hanna and Wu, Charley and Lake, Brenden and Rehder, Bob}, howpublished = {WiML Workshop at NeurIPS}, year = {2025}, url = {https://arxiv.org/abs/2512.11909}, }

2024


Plenoptic: A platform for synthesizing model-optimized visual stimuli
Edoardo Balzani, Kathryn Bonnen, William Broderick, Hanna M. Dettki, Lyndon Duong, and 6 more authors
2024

@software{plenoptic, author = {Balzani, Edoardo and Bonnen, Kathryn and Broderick, William and Dettki, Hanna M. and Duong, Lyndon and Fiquet, Pierre-Étienne and Herrera-Esposito, Daniel and Parthasarathy, Nikhil and Simoncelli, Eero and Yerxa, Thomas and Zhao, Xinyuan}, doi = {10.5281/zenodo.10151130}, license = {MIT}, title = {{Plenoptic: A platform for synthesizing model-optimized visual stimuli}}, year = {2024}, }

Do Large Language Models Understand Cause and Effect?
Hanna Dettki, Charley Wu, Brenden Lake, and Bob Rehder
WiML Workshop at NeurIPS, 2024

@misc{Dettki2024DoLarge, author = {Dettki, Hanna and Wu, Charley and Lake, Brenden and Rehder, Bob}, title = {Do Large Language Models Understand Cause and Effect?}, howpublished = {WiML Workshop at NeurIPS}, year = {2024}, }

2019


Executable State Machines Derived from Structured Textual Requirements-Connecting Requirements and Formal System Design
Benedikt Walter, Jan Martin, Jonathan Schmidt, Hanna M. Dettki, and Stephan Rudolph
In Proceedings of the 7th International Conference on Model-Driven Engineering and Software Development, 2019

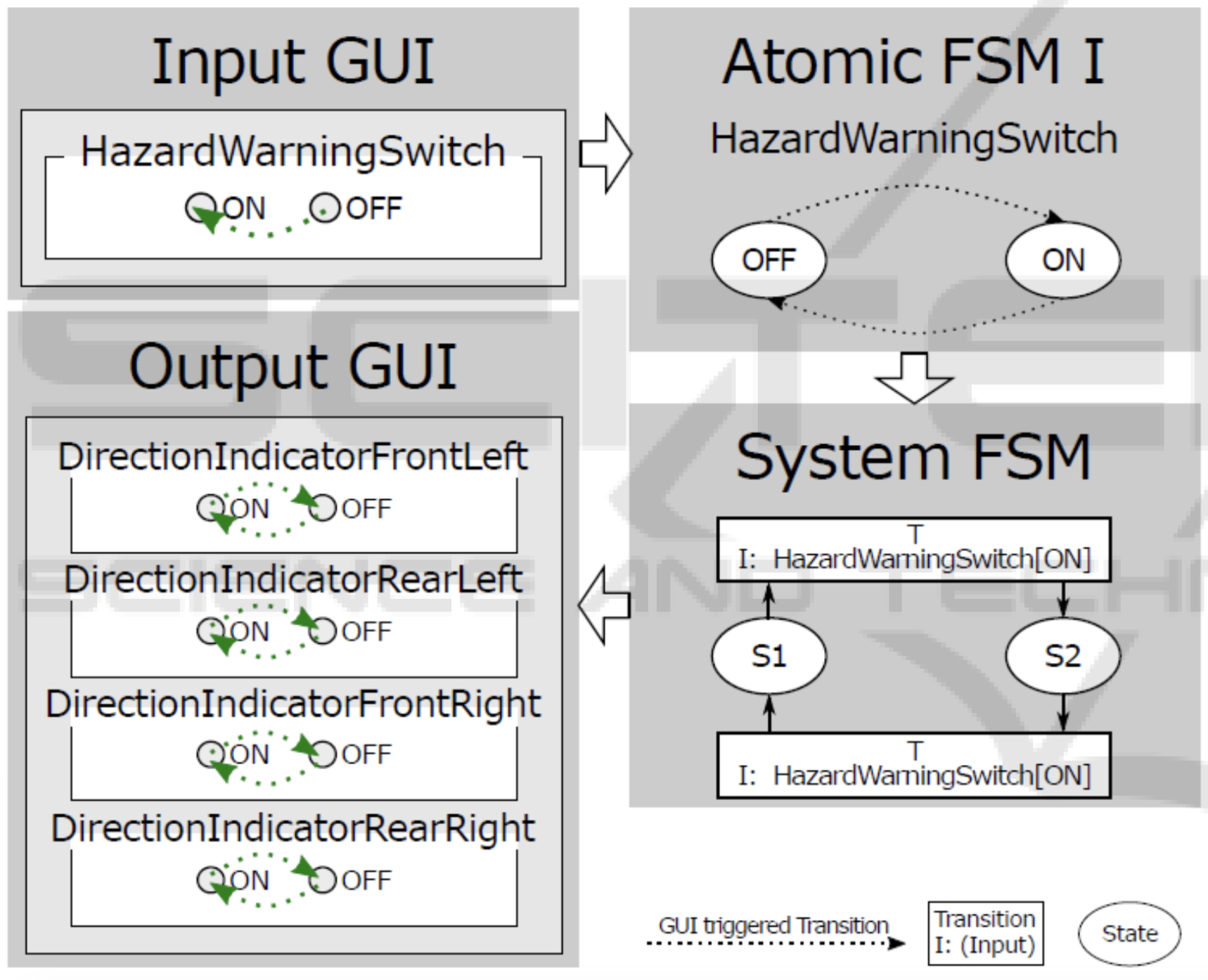

There exists a gap between (textual) requirements specification and the systems created in the system design process. System design, particularly in automotive, is a tremendously complex process. The sheer number of requirements for a system is too high to be considered at once. In industrial contexts, complex systems are commonly created through many design iterations, with numerous hardware samples and software versions built. System experts include many experience-based design decisions in the process. This approach eventually leads to a somewhat consistent system without formal consideration of requirements or a traceable design decision process. The process leaves a de facto gap between specification and system design. Ideally, requirements constrain the initial solution space, and system design can choose between the design variants consistent with that reduced solution space. In reality, the true solution space is unknown, and the effect of particular requirements on that solution space is a guessing game. Therefore, we propose a process chain that formally includes requirements in the system design process and generates an executable system model. Requirements documented as structured text are mapped into the logic space. Temporal logic allows the generation of consistent static state machines. Extracting and modelling the input/output signals of that state machine enables us to generate an executable system model, fully derived from its requirements. This bridges the existing gap between requirements specification and system design. The correctness and usefulness of this approach are shown in a case study on automotive systems at Daimler AG.

@inproceedings{walter2019executable, title = {Executable State Machines Derived from Structured Textual Requirements-Connecting Requirements and Formal System Design}, author = {Walter, Benedikt and Martin, Jan and Schmidt, Jonathan and Dettki, Hanna M. and Rudolph, Stephan}, booktitle = {Proceedings of the 7th International Conference on Model-Driven Engineering and Software Development}, pages = {193--200}, year = {2019}, }